What are the main points of Seq2seq?

2020/10/30

-----

Overview:

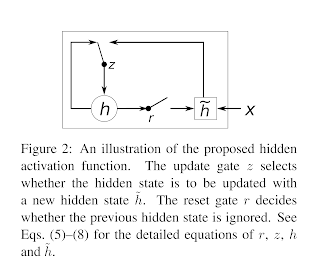

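Seq2seq is an encoder-decoder architecture built from LSTM units, designed mainly for machine translation [1]. A slightly earlier, similar design is the RNN encoder-decoder [2], [3], which uses GRU units (a simplified variant of the LSTM); Seq2seq simply got the catchier name. Seq2seq itself builds on and modifies RCTM [4].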
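The following is a minimal sketch of the encoder-decoder data flow. It assumes a plain tanh RNN cell in place of the LSTM (or GRU) units the papers actually use, and its weights are hypothetical untrained toy values. The point it shows is structural: however long the source sentence is, the encoder hands the decoder a single fixed-size context vector.

```python
import math

def rnn_step(h, x, w_h, w_x):
    """One step of a plain tanh RNN cell (stand-in for an LSTM): h_new = tanh(W_h h + W_x x)."""
    return [
        math.tanh(
            sum(w_h[i][j] * h[j] for j in range(len(h)))
            + sum(w_x[i][k] * x[k] for k in range(len(x)))
        )
        for i in range(len(h))
    ]

def encode(source, w_h, w_x, hidden_size):
    """Read the whole source sequence and keep only the final hidden state:
    this single fixed-size vector is all the decoder will ever see."""
    h = [0.0] * hidden_size
    for x in source:
        h = rnn_step(h, x, w_h, w_x)
    return h

def decode(context, steps, w_h, w_y, w_out):
    """Start from the context vector and unroll, feeding each output back in as the next input."""
    h = list(context)
    y = [0.0] * len(w_out)  # "start" input: an all-zero output vector
    outputs = []
    for _ in range(steps):
        h = rnn_step(h, y, w_h, w_y)
        y = [sum(row[i] * h[i] for i in range(len(h))) for row in w_out]
        outputs.append(y)
    return outputs

# Hypothetical toy weights (untrained): hidden size 2, input size 3, output size 2.
W_H_ENC = [[0.5, -0.2], [0.1, 0.3]]
W_X_ENC = [[0.2, 0.0, -0.1], [0.0, 0.4, 0.2]]
W_H_DEC = [[0.3, 0.1], [-0.1, 0.4]]
W_Y_DEC = [[0.3, 0.1], [-0.2, 0.2]]
W_OUT = [[1.0, 0.0], [0.0, 1.0]]

short_src = [[1, 0, 0], [0, 1, 0]]
long_src = short_src * 10  # 20 time steps
ctx_short = encode(short_src, W_H_ENC, W_X_ENC, 2)
ctx_long = encode(long_src, W_H_ENC, W_X_ENC, 2)
print(len(ctx_short), len(ctx_long))  # → 2 2
print(decode(ctx_short, 3, W_H_DEC, W_Y_DEC, W_OUT))
```

Training (backpropagation through time) and the LSTM gates are deliberately left out. Only the shape of the interface between encoder and decoder is illustrated.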

Besides machine translation, Seq2seq can also be applied to speech recognition; see CTC [5].
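CTC [5] handles the alignment between audio frames and output labels differently from an encoder-decoder. The network emits one label, or a special "blank", per input frame. The output sequence is then read off by merging repeated labels and removing blanks. The sketch below shows best-path (greedy) decoding, the simplest decoding scheme discussed in [5]. The blank symbol `-`, the alphabet and the frame probabilities are toy assumptions.

```python
BLANK = "-"

def ctc_collapse(frame_labels, blank=BLANK):
    """Map a per-frame label path to an output sequence:
    merge consecutive repeats first, then drop blanks."""
    out = []
    prev = None
    for label in frame_labels:
        if label != prev and label != blank:
            out.append(label)
        prev = label
    return out

def best_path_decode(frame_probs, alphabet, blank=BLANK):
    """Greedy decoding: take the most probable label at each frame, then collapse the path."""
    path = [alphabet[max(range(len(p)), key=p.__getitem__)] for p in frame_probs]
    return "".join(ctc_collapse(path, blank))

# Toy alphabet and per-frame probabilities (hypothetical network output).
ALPHABET = ["-", "a", "b"]
FRAMES = [
    [0.1, 0.8, 0.1],    # a
    [0.2, 0.7, 0.1],    # a again: merged with the previous frame
    [0.9, 0.05, 0.05],  # blank
    [0.1, 0.2, 0.7],    # b
]
print(best_path_decode(FRAMES, ALPHABET))            # → ab
print("".join(ctc_collapse(list("hh-e-ll-ll-oo"))))  # → hello
```

The blank is what allows genuine double letters: without the blank between the two `l` runs, `"hhelllo"` would collapse to `"helo"`.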

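Because Seq2seq compresses the entire source sentence into a single vector, which cannot hold enough information, the follow-up Attention model [6] not only replaces the single vector with multiple vectors but also uses a neural network to learn a weight for each of them. ConvS2S [7], building on Attention and RCTM, breaks attention down into QKV: the Query, Key, and Value hold, respectively, the prediction of the next word, an index, and the actual meaning of the word. The Transformer goes further by training QKV separately on the encoder and decoder sides, which brought it enormous success in machine translation [8]. BERT, designed on top of the Transformer encoder, has in turn become the standard for natural language processing (NLP) [9].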
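Instead of one context vector, attention builds a fresh weighted sum of all encoder states at every decoding step. The sketch below contrasts two scoring functions:

- an additive score computed by a small feed-forward network, in the style of Attention [6];
- a scaled dot-product score, in the style of the Transformer [8].

It is a simplification under stated assumptions. All weights are hypothetical and untrained. The encoder states serve as both keys and values. The Transformer's learned Q/K/V projection matrices and multi-head split are omitted.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def additive_score(query, key, w_q, w_k, v):
    """Additive (Bahdanau-style) score: v . tanh(W_q q + W_k k), a one-hidden-layer network."""
    hidden = [math.tanh(dot(wq, query) + dot(wk, key)) for wq, wk in zip(w_q, w_k)]
    return dot(v, hidden)

def scaled_dot_score(query, key):
    """Transformer-style score: (q . k) / sqrt(d_k)."""
    return dot(query, key) / math.sqrt(len(key))

def attend(query, keys, values, score_fn):
    """Turn scores into weights with softmax, then return the weighted sum of the values."""
    weights = softmax([score_fn(query, k) for k in keys])
    context = [sum(w * val[i] for w, val in zip(weights, values))
               for i in range(len(values[0]))]
    return context, weights

# Toy encoder states (used as both keys and values) and a decoder-side query.
ENC_STATES = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
QUERY = [1.0, 0.0]

# Hypothetical, untrained parameters for the additive scorer.
W_Q = [[0.5, 0.1], [0.2, -0.3]]
W_K = [[0.4, 0.0], [0.0, 0.4]]
V = [1.0, 1.0]

ctx_dot, w_dot = attend(QUERY, ENC_STATES, ENC_STATES, scaled_dot_score)
ctx_add, w_add = attend(QUERY, ENC_STATES, ENC_STATES,
                        lambda q, k: additive_score(q, k, W_Q, W_K, V))
print([round(w, 3) for w in w_dot])
print([round(w, 3) for w in w_add])
```

Both variants share the same softmax-and-sum step. They differ only in how each query-key pair is scored.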

-----

I. Basic Learning and Paper Comprehension (70%)

◎ 1. What can we learn from this paper (what problem does it solve)?

# Learn Machine Translation in Just One Minute | 知智一分钟 - Zhihu

https://zhuanlan.zhihu.com/p/44396098

-----

◎ A. Cause of the problem.

◎ 1.a.1: How far had this field progressed before this work?

# Seq2seq [1].

-----

◎ 1.a.2: What bottlenecks has recent research run into?

# RCTM [4].

It loses word order.

-----

# ConvS2S [7].

-----

◎ 1.a.3: What is the root cause of the issue?

# The Star Also Rises: [Translation] Understanding LSTM Networks

http://hemingwang.blogspot.com/2019/09/understanding-lstm-networks.html

-----

◎ B. Solution.

◎ 1.b.1: What approach did the authors take?

# Seq2seq [1].

-----

◎ 1.b.2: How are the details worked out?

# The Star Also Rises: NLP (2): Seq2seq [12].

https://hemingwang.blogspot.com/2019/09/seq2seq.html

-----

◎ 1.b.3: (optional) Do the authors explain their line of reasoning?

# Rerank [1].

-----

- Or how do later researchers discuss it?

# The Attention authors' discussion of Seq2seq [6].

-----

1. This neural machine translation approach typically consists of two components, the first of which encodes a source sentence x and the second decodes to a target sentence y.

2. Adding neural components to existing translation systems, for instance, to score the phrase pairs in the phrase table (Cho et al., 2014a) or to re-rank candidate translations (Sutskever et al., 2014), has allowed to surpass the previous state-of-the-art performance level.
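Point 2 above mentions re-ranking candidate translations. Sutskever et al. [1] describe doing this to the baseline SMT system's n-best lists. They compute each hypothesis's log probability with the LSTM and take an even average of the SMT score and the LSTM score.

Below is a minimal sketch of that rescoring step. The n-best list, its scores, and `toy_lstm_log_prob` are hypothetical stand-ins for a real phrase-based system and a trained Seq2seq model.

```python
def rerank(nbest, lstm_log_prob):
    """Rescore an n-best list by evenly averaging each hypothesis's SMT score
    with the LSTM's log probability, and return the hypotheses best-first."""
    rescored = [
        (0.5 * smt_score + 0.5 * lstm_log_prob(hyp), hyp)
        for hyp, smt_score in nbest
    ]
    rescored.sort(key=lambda pair: pair[0], reverse=True)
    return [hyp for _, hyp in rescored]

# Toy n-best list from a hypothetical phrase-based system: (hypothesis, SMT score).
NBEST = [("cat the sat", -3.5), ("the cat sat", -4.0)]

# Hypothetical stand-in for a trained model's log P(hypothesis | source).
TOY_LSTM_SCORES = {"the cat sat": -2.0, "cat the sat": -6.0}

def toy_lstm_log_prob(hyp):
    return TOY_LSTM_SCORES[hyp]

print(rerank(NBEST, toy_lstm_log_prob))  # → ['the cat sat', 'cat the sat']
```

On its own, the SMT score prefers "cat the sat" (-3.5 beats -4.0). After averaging in the LSTM score, "the cat sat" wins (-3.0 against -4.75).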

-----

◎ C. Performance evaluation.

◎ 1.c.1: Comparison of results.

# Seq2seq [1].
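Seq2seq [1] reports its translation results as BLEU scores; see also [10]. Below is a minimal sketch of sentence-level BLEU under simplifying assumptions:

- a single reference;
- uniform weights over 1- to 4-grams;
- the usual brevity penalty;
- no smoothing.

Published scores are corpus-level, pooling n-gram counts over all sentences, so this function (named `bleu` here for illustration) will not reproduce them exactly.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """Count all n-grams of length n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def bleu(candidate, reference, max_n=4):
    """Sentence-level BLEU with one reference, uniform weights, no smoothing."""
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand = ngrams(candidate, n)
        ref = ngrams(reference, n)
        # Clipped counts: a candidate n-gram is credited at most as often as it appears in the reference.
        overlap = sum(min(count, ref[g]) for g, count in cand.items())
        total = sum(cand.values())
        if overlap == 0 or total == 0:
            return 0.0
        log_precision_sum += math.log(overlap / total) / max_n
    c, r = len(candidate), len(reference)
    bp = 1.0 if c > r else math.exp(1 - r / c)  # brevity penalty for short candidates
    return bp * math.exp(log_precision_sum)

REF = "the cat is on the mat".split()
print(bleu(REF, REF))                          # → 1.0
print(bleu("the cat".split(), REF, max_n=2))   # perfect precision, brevity penalty only: exp(-2)
```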

-----

◎ 1.c.2: Does this method still have limitations? If so, what are they?

https://lilianweng.github.io/lil-log/2018/06/24/attention-attention.html

[8].

-----

◎ 1.c.3: (optional) What do the authors hope for in future work?

# Seq2seq [1]

-----

◎ Most importantly, we demonstrated that a simple, straightforward and a relatively unoptimized approach can outperform a mature SMT system, so further work will likely lead to even greater translation accuracies. These results suggest that our approach will likely do well on other challenging sequence to sequence problems.

-----

- What have other researchers developed since?

-----

II. Subsequent Developments and Extended Applications (30%)

◎ 2. Which vertical domains (application areas) can it be applied to?

# Speech Recognition [5].

-----

◎ 3. Where does the value of this paper lie (how can it be extended to other domains)?

# Sequence to Sequence from Scratch | 雷德麥的藏書閣

http://zake7749.github.io/2017/09/28/Sequence-to-Sequence-tutorial/

-----

◎ 4. How could it be improved (follow-up research)?

# Attention [6].

-----

References

◎ Main paper

[1] Seq2seq. Cited 12,676 times.

Sutskever, Ilya, Oriol Vinyals, and Quoc V. Le. "Sequence to sequence learning with neural networks." Advances in neural information processing systems. 2014.

http://papers.nips.cc/paper/5346-sequence-to-sequence-learning-with-neural-networks.pdf

-----

◎ Related papers

[2] RNN encoder-decoder. A paper close to Seq2seq in both timing and results. Cited 11,284 times.

Cho, Kyunghyun, et al. "Learning phrase representations using RNN encoder-decoder for statistical machine translation." arXiv preprint arXiv:1406.1078 (2014).

https://arxiv.org/pdf/1406.1078.pdf

[3] Applications of the RNN encoder-decoder. Cited 3,105 times.

Cho, Kyunghyun, et al. "On the properties of neural machine translation: Encoder-decoder approaches." arXiv preprint arXiv:1409.1259 (2014).

https://arxiv.org/pdf/1409.1259.pdf

[4] RCTM. The main paper Seq2seq builds on. Cited 1,137 times.

Kalchbrenner, Nal, and Phil Blunsom. "Recurrent continuous translation models." Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing. 2013.

https://www.aclweb.org/anthology/D13-1176.pdf

[5] CTC (Connectionist Temporal Classification). Speech recognition. Cited 2,535 times.

Graves, Alex, et al. "Connectionist temporal classification: labelling unsegmented sequence data with recurrent neural networks." Proceedings of the 23rd international conference on Machine learning. 2006.

http://axon.cs.byu.edu/~martinez/classes/778/Papers/p369-graves.pdf

[6] Attention. An improvement on Seq2seq's encoder-decoder architecture. Cited 14,826 times.

Bahdanau, Dzmitry, Kyunghyun Cho, and Yoshua Bengio. "Neural machine translation by jointly learning to align and translate." arXiv preprint arXiv:1409.0473 (2014).

https://arxiv.org/pdf/1409.0473.pdf

[7] ConvS2S. An improvement on RCTM. Cited 1,772 times.

Gehring, Jonas, et al. "Convolutional sequence to sequence learning." arXiv preprint arXiv:1705.03122 (2017).

https://arxiv.org/pdf/1705.03122.pdf

[8] Transformer. The culmination of the encoder-decoder architecture. Cited 13,554 times.

Vaswani, Ashish, et al. "Attention is all you need." Advances in neural information processing systems. 2017.

https://papers.nips.cc/paper/7181-attention-is-all-you-need.pdf

[9] BERT. A pre-trained NLP model built on the Transformer encoder. Cited 11,157 times.

Devlin, Jacob, et al. "Bert: Pre-training of deep bidirectional transformers for language understanding." arXiv preprint arXiv:1810.04805 (2018).

https://arxiv.org/pdf/1810.04805.pdf

-----

◎ Web articles

[10] The Star Also Rises: BLEU

http://hemingwang.blogspot.com/2020/11/bleu.html

[11] The Star Also Rises: [Translation] Word Level English to Marathi Neural Machine Translation using Encoder-Decoder Model

http://hemingwang.blogspot.com/2019/10/word-level-english-to-marathi-neural.html

[12] The Star Also Rises: NLP (2): Seq2seq

https://hemingwang.blogspot.com/2019/09/seq2seq.html

-----

# Teaching assistant: 銘文.

-----
