NLP (1): LSTM

2019/09/06

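Description:

Recurrent Neural Networks (RNN) and Long Short-Term Memory (LSTM) [1]-[3] are both used to process time-series signals such as audio, speech, and language [4], [5]. Because plain RNNs suffer from vanishing and exploding gradients, LSTM was developed to replace them; its gating mainly mitigates vanishing gradients, while exploding gradients can still occur. The RNN family also has an inherent limitation: each time step depends on the previous one, so the computation cannot be parallelized across the sequence on a GPU. As a result, although NLP language models such as Seq2seq and Attention were originally built on LSTM and GRU, the field eventually abandoned the RNN family in favor of the Transformer, which is built from self-attention and fully connected layers, and achieved very good results [8]-[10]. Even so, newer RNN models such as MGU and SRU continue to be proposed [11].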
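To make the vanishing and exploding gradient problem concrete, here is a minimal NumPy sketch. It is an illustration under simplifying assumptions, not taken from the cited references: the RNN is linear (h_t = W h_{t-1}), and W is a scaled random orthogonal matrix, so every backward step changes the gradient norm by exactly the factor `scale`. The function name `backprop_norms` and all parameters are hypothetical.

```python
import numpy as np

def backprop_norms(scale, steps=50, hidden=16, seed=0):
    """Gradient norms while backpropagating through `steps` time steps.

    Assumption: a linear RNN h_t = W h_{t-1}, so each backward step
    multiplies the gradient by W^T. W = scale * Q with Q orthogonal,
    which makes the norm shrink or grow by `scale` per step.
    """
    rng = np.random.default_rng(seed)
    q, _ = np.linalg.qr(rng.standard_normal((hidden, hidden)))
    w = scale * q
    grad = np.ones(hidden)          # gradient arriving at the last time step
    norms = []
    for _ in range(steps):
        grad = w.T @ grad           # one step of backpropagation through time
        norms.append(np.linalg.norm(grad))
    return norms

vanishing = backprop_norms(0.9)     # scale < 1: the norm decays geometrically
exploding = backprop_norms(1.1)     # scale > 1: the norm grows geometrically
print(f"scale 0.9, after 50 steps: {vanishing[-1]:.3e}")
print(f"scale 1.1, after 50 steps: {exploding[-1]:.3e}")
```

With a real nonlinear RNN the per-step factor also depends on the activation derivative, but the same repeated-product structure is what drives both effects.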

-----

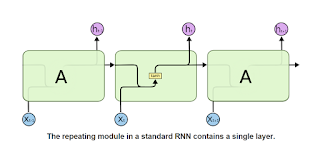

Fig. 1. RNN, [1].

-----

Recurrent Neural Networks and LSTM explained - purnasai gudikandula - Medium

-----

Fig. 3.1b. BPTT algorithm, p. 243, [14].

-----

Recurrent Neural Networks and LSTM explained - purnasai gudikandula - Medium

-----

Fig. 2. LSTM, [1].

-----

// Recurrent Neural Networks and LSTM explained - purnasai gudikandula - Medium

-----

Understanding LSTM and its diagrams - ML Review - Medium

-----

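The key points are the three sigmoids, which generate the gate control signals, and the two tanh functions, which squash the data into the range (-1, 1).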
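The three sigmoids and two tanh functions can be seen in a single LSTM time step. The following is a minimal NumPy sketch of the standard LSTM cell without peephole connections. The function name `lstm_step`, the stacked weight layout, and the gate ordering (forget, input, output, candidate) are assumptions of this sketch, not the layout of any particular library.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM time step.

    W has shape (4 * hidden, input + hidden), b has shape (4 * hidden,).
    The stacking order forget/input/output/candidate is an assumption.
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    f = sigmoid(z[0 * hidden:1 * hidden])   # sigmoid 1: forget gate
    i = sigmoid(z[1 * hidden:2 * hidden])   # sigmoid 2: input gate
    o = sigmoid(z[2 * hidden:3 * hidden])   # sigmoid 3: output gate
    g = np.tanh(z[3 * hidden:4 * hidden])   # tanh 1: candidate cell values
    c = f * c_prev + i * g                  # gated update of the cell state
    h = o * np.tanh(c)                      # tanh 2: squash the cell state
    return h, c

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4
W = 0.1 * rng.standard_normal((4 * hidden_size, input_size + hidden_size))
b = np.zeros(4 * hidden_size)
h = np.zeros(hidden_size)
c = np.zeros(hidden_size)
for x in rng.standard_normal((5, input_size)):   # a toy sequence of 5 steps
    h, c = lstm_step(x, h, c, W, b)
print(h.shape, c.shape)
```

Each gate lies in (0, 1) and multiplies another signal elementwise, which is why the sigmoids act as control signals, while the tanh outputs carry the data itself.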

Optimizing Recurrent Neural Networks in cuDNN 5

-----

Fig. 14. Peephole connections [1].

-----

Fig. 15. Coupled forget and input gates [1].

-----

Fig. 16. GRU [1].
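For comparison with the LSTM, a GRU step can be sketched in a few lines of NumPy. This is a hedged illustration: it follows the common formulation with an update gate z and a reset gate r, omits biases for brevity, and uses the hypothetical names `gru_step`, `Wz`, `Wr`, and `Wh`. Some libraries swap the roles of z and 1 - z in the final interpolation.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x, h_prev, Wz, Wr, Wh):
    """One GRU time step (biases omitted; an assumption of this sketch).

    Each weight matrix has shape (hidden, hidden + input).
    """
    hx = np.concatenate([h_prev, x])
    z = sigmoid(Wz @ hx)                                      # update gate
    r = sigmoid(Wr @ hx)                                      # reset gate
    h_tilde = np.tanh(Wh @ np.concatenate([r * h_prev, x]))   # candidate state
    return (1.0 - z) * h_prev + z * h_tilde                   # blend old and new

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4
shape = (hidden_size, hidden_size + input_size)
Wz, Wr, Wh = (0.1 * rng.standard_normal(shape) for _ in range(3))
h = np.zeros(hidden_size)
for x in rng.standard_normal((5, input_size)):   # a toy sequence of 5 steps
    h = gru_step(x, h, Wz, Wr, Wh)
print(h.shape)
```

Compared with the LSTM, the GRU keeps a single state vector and uses two sigmoid gates and one tanh instead of three and two.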

-----

// Why LSTM cannot prevent gradient exploding - Cecile Liu - Medium

-----

References

◎ Papers

# LSTM (Long Short-Term Memory)

Hochreiter, Sepp, and Jürgen Schmidhuber. "Long short-term memory." Neural computation 9.8 (1997): 1735-1780.

http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.676.4320&rep=rep1&type=pdf

-----

◎ English references

Understanding LSTM Networks -- colah's blog

http://colah.github.io/posts/2015-08-Understanding-LSTMs/

The Unreasonable Effectiveness of Recurrent Neural Networks

http://karpathy.github.io/2015/05/21/rnn-effectiveness/

# 10.8K claps

The fall of RNN / LSTM – Towards Data Science

https://towardsdatascience.com/the-fall-of-rnn-lstm-2d1594c74ce0

-----

Written Memories Understanding, Deriving and Extending the LSTM - R2RT

https://r2rt.com/written-memories-understanding-deriving-and-extending-the-lstm.html

Neural Network Zoo Prequel Cells and Layers - The Asimov Institute

https://www.asimovinstitute.org/neural-network-zoo-prequel-cells-layers/

-----

# 10.1K claps

[] Illustrated Guide to LSTM’s and GRU’s A step by step explanation

https://towardsdatascience.com/illustrated-guide-to-lstms-and-gru-s-a-step-by-step-explanation-44e9eb85bf21

# 7.8K claps

[] Understanding LSTM and its diagrams - ML Review - Medium

https://medium.com/mlreview/understanding-lstm-and-its-diagrams-37e2f46f1714

# 386 claps

[] The magic of LSTM neural networks - DataThings - Medium

https://medium.com/datathings/the-magic-of-lstm-neural-networks-6775e8b540cd

# 247 claps

[] Recurrent Neural Networks and LSTM explained - purnasai gudikandula - Medium

https://medium.com/@purnasaigudikandula/recurrent-neural-networks-and-lstm-explained-7f51c7f6bbb9

# 113 claps

[] A deeper understanding of NNets (Part 3) — LSTM and GRU

https://medium.com/@godricglow/a-deeper-understanding-of-nnets-part-3-lstm-and-gru-e557468acb04

# 58 claps

[] Basic understanding of LSTM - Good Audience

https://blog.goodaudience.com/basic-understanding-of-lstm-539f3b013f1e

Optimizing Recurrent Neural Networks in cuDNN 5

https://devblogs.nvidia.com/optimizing-recurrent-neural-networks-cudnn-5/

-----

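◎ Simplified Chinese references

# Vanishing gradient, exploding gradient

[] Simplifying one diagram three times: one trick for understanding LSTM/GRU gating mechanisms - 机器之心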

https://www.jiqizhixin.com/articles/2018-12-18-12

# Vanishing gradient, exploding gradient

[] Long Short-Term Memory (LSTM): TensorFlow code implementation - Jason160918's blog - CSDN blog

https://blog.csdn.net/Jason160918/article/details/78295423

[] Zhi-Hua Zhou et al. propose an interpretability method for RNNs: a look at what happens inside an RNN - 机器之心

https://www.jiqizhixin.com/articles/110404

-----

◎ Traditional Chinese references

[] Recurrent neural networks and long short-term memory models, RNN & LSTM · 資料科學・機器・人

https://brohrer.mcknote.com/zh-Hant/how_machine_learning_works/how_rnns_lstm_work.html

# 593 claps

[] A brief look at recurrent neural networks (RNN) and long short-term memory models (LSTM) - TengYuan Chang - Medium

https://medium.com/@tengyuanchang/%E6%B7%BA%E8%AB%87%E9%81%9E%E6%AD%B8%E7%A5%9E%E7%B6%93%E7%B6%B2%E8%B7%AF-rnn-%E8%88%87%E9%95%B7%E7%9F%AD%E6%9C%9F%E8%A8%98%E6%86%B6%E6%A8%A1%E5%9E%8B-lstm-300cbe5efcc3

# 405 claps

[] AI course quick notes: introduction to deep learning (2) - Gimi Kao - Medium

https://medium.com/@baubibi/%E9%80%9F%E8%A8%98ai%E8%AA%B2%E7%A8%8B-%E6%B7%B1%E5%BA%A6%E5%AD%B8%E7%BF%92%E5%85%A5%E9%96%80-%E4%BA%8C-954b0e473d7f

Why LSTM cannot prevent gradient exploding - Cecile Liu - Medium

https://medium.com/@CecileLiu/why-lstm-cannot-prevent-gradient-exploding-17fd52c4d772

[Translation] Understanding LSTM Networks

https://hemingwang.blogspot.com/2019/09/understanding-lstm-networks.html

-----

[] Deep Learning made accessible (3): RNN (LSTM)

http://hemingwang.blogspot.com/2018/02/airnnlstmin-120-mins.html

[] AI from scratch (19): Recurrent Neural Network

http://hemingwang.blogspot.com/2017/03/airecurrent-neural-network.html

-----

◎ Code implementations

[] Sequence Models and Long-Short Term Memory Networks — PyTorch Tutorials 1.2.0 documentation

https://pytorch.org/tutorials/beginner/nlp/sequence_models_tutorial.html

Predict Stock Prices Using RNN Part 1

https://lilianweng.github.io/lil-log/2017/07/08/predict-stock-prices-using-RNN-part-1.html

Predict Stock Prices Using RNN Part 2

https://lilianweng.github.io/lil-log/2017/07/22/predict-stock-prices-using-RNN-part-2.html

# 230 claps

[] [Keras] Building an LSTM model with Keras, using stock prediction as an example (1) - PJ Wang - Medium

https://medium.com/@daniel820710/%E5%88%A9%E7%94%A8keras%E5%BB%BA%E6%A7%8Blstm%E6%A8%A1%E5%9E%8B-%E4%BB%A5stock-prediction-%E7%82%BA%E4%BE%8B-1-67456e0a0b

# 38 claps

[] LSTM, deep learning, stock price prediction - Data Scientists Playground - Medium

https://medium.com/data-scientists-playground/lstm-%E6%B7%B1%E5%BA%A6%E5%AD%B8%E7%BF%92-%E8%82%A1%E5%83%B9%E9%A0%90%E6%B8%AC-cd72af64413a
