Softmax

2020/07/23

-----

Softmax

https://pojenlai.wordpress.com/2016/02/27/tensorflow%E8%AA%B2%E7%A8%8B%E7%AD%86%E8%A8%98-softmax%E5%AF%A6%E4%BD%9C/

-----

Thursday, July 23, 2020

WordRank

WordRank

2020/07/20

-----

References

Ji, Shihao, et al. "Wordrank: Learning word embeddings via robust ranking." arXiv preprint arXiv:1506.02761 (2015).

https://arxiv.org/pdf/1506.02761.pdf

WordRank embedding: “crowned” is most similar to “king”, not word2vec’s “Canute” | RARE Technologies

https://rare-technologies.com/wordrank-embedding-crowned-is-most-similar-to-king-not-word2vecs-canute/

fastText v2

fastText v2

2020/07/20

-----

References

Bojanowski, Piotr, et al. "Enriching word vectors with subword information." Transactions of the Association for Computational Linguistics 5 (2017): 135-146.

https://www.mitpressjournals.org/doi/pdfplus/10.1162/tacl_a_00051

Word Embedding Papers | 经典再读之fastText | 机器之心

https://www.jiqizhixin.com/articles/2020-07-03-14

【NLP论文笔记】Enriching word vectors with subword information(FastText词向量) - 简书

https://www.jianshu.com/p/e49b4777a068

fastText v1

fastText v1

2020/07/20

-----

References

Word Embedding Papers | 经典再读之fastText | 机器之心

https://www.jiqizhixin.com/articles/2020-07-03-14

笔记: Bag of Tricks for Efficient Text Classification

https://www.paperweekly.site/papers/notes/132

GloVe

GloVe

2020/07/20

-----

https://pixabay.com/zh/photos/boxing-gloves-sport-pink-glove-415394/

-----

https://towardsdatascience.com/word-embeddings-for-nlp-5b72991e01d4

-----

References

◎ English

NLP — Word Embedding & GloVe. BERT is a major milestone in creating… | by Jonathan Hui | Medium

https://medium.com/@jonathan_hui/nlp-word-embedding-glove-5e7f523999f6

Word Vectors in Natural Language Processing: Global Vectors (GloVe) | by Sciforce | Sciforce | Medium

https://medium.com/sciforce/word-vectors-in-natural-language-processing-global-vectors-glove-51339db89639

NLP and Deep Learning All-in-One Part II: Word2vec, GloVe, and fastText | by Bruce Yang | Medium

https://medium.com/@bruceyanghy/nlp-and-deep-learning-all-in-one-part-ii-word2vec-glove-and-fasttext-184bd03a7ba

What is GloVe?. GloVe stands for global vectors for… | by Japneet Singh Chawla | Analytics Vidhya | Medium

https://medium.com/analytics-vidhya/word-vectorization-using-glove-76919685ee0b

Intuitive Guide to Understanding GloVe Embeddings | by Thushan Ganegedara | Towards Data Science

https://towardsdatascience.com/light-on-math-ml-intuitive-guide-to-understanding-glove-embeddings-b13b4f19c010

Word Embeddings for NLP. Understanding word embeddings and their… | by Renu Khandelwal | Towards Data Science

https://towardsdatascience.com/word-embeddings-for-nlp-5b72991e01d4

-----

◎ Simplified Chinese

GloVe详解 | 范永勇

http://www.fanyeong.com/2018/02/19/glove-in-detail/

GloVe:另一种Word Embedding方法

https://pengfoo.com/post/machine-learning/2017-04-11

CS224N NLP with Deep Learning(三):词向量之GloVe - 知乎

https://zhuanlan.zhihu.com/p/50543888

NLP︱高级词向量表达(一)——GloVe(理论、相关测评结果、R&python实现、相关应用)_素质云笔记/Recorder...-CSDN博客_glove

https://blog.csdn.net/sinat_26917383/article/details/54847240

2.8 GloVe词向量-深度学习第五课《序列模型》-Stanford吴恩达教授_Jichao Zhao的博客-CSDN博客_glove单词表示的全局变量

https://blog.csdn.net/weixin_36815313/article/details/106633339

四步理解GloVe!(附代码实现) - 知乎

https://zhuanlan.zhihu.com/p/79573970

LSA

LSA(Latent Semantic Analysis)

2020/07/22

-----

TF-IDF

https://towardsdatascience.com/latent-semantic-analysis-distributional-semantics-in-nlp-ea84bf686b50

-----

TF-IDF

https://medium.com/nanonets/topic-modeling-with-lsa-psla-lda-and-lda2vec-555ff65b0b05

-----

LSA1

https://www.analyticsvidhya.com/blog/2018/10/stepwise-guide-topic-modeling-latent-semantic-analysis/

-----

LSA2

https://www.analyticsvidhya.com/blog/2018/10/stepwise-guide-topic-modeling-latent-semantic-analysis/

-----

Term Co-occurrence Matrix

https://towardsdatascience.com/latent-semantic-analysis-deduce-the-hidden-topic-from-the-document-f360e8c0614b

-----

SVD

https://blog.csdn.net/weixin_42398658/article/details/85088130

-----

SVD

https://www.jianshu.com/p/9fe0a7004560

-----

SVD and LSA

https://blog.csdn.net/callejon/article/details/49811819

-----

Saturday, July 18, 2020

RNNLM

RNNLM

2020/07/18

-----

References

RNNLM

Mikolov, Tomáš, Martin Karafiát, Lukáš Burget, Jan Černocký, and Sanjeev Khudanpur. "Recurrent neural network based language model." Eleventh Annual Conference of the International Speech Communication Association. 2010.

https://www.fit.vutbr.cz/research/groups/speech/publi/2010/mikolov_interspeech2010_IS100722.pdf

RNNLM Extension

Mikolov, Tomáš, et al. "Extensions of recurrent neural network language model." 2011 IEEE international conference on acoustics, speech and signal processing (ICASSP). IEEE, 2011.

https://pdfs.semanticscholar.org/bba8/a2c9b9121e7c78e91ea2a68630e77c0ad20f.pdf

RNNLM Toolkit

Mikolov, Tomáš, et al. "RNNLM - Recurrent neural network language modeling toolkit." Proc. of the 2011 ASRU Workshop. 2011.

http://www.fit.vutbr.cz/~imikolov/rnnlm/rnnlm-demo.pdf

C&W

C&W

2020/07/18

-----

References

// English resources

C&W

Collobert, Ronan, et al. "Natural language processing (almost) from scratch." Journal of Machine Learning Research 12 (2011): 2493-2537.

http://www.jmlr.org/papers/volume12/collobert11a/collobert11a.pdf

// English slides

NLP from scratch

https://www.slideshare.net/bryanzhanghang/nlp-from-scratch

// English slides

PPT - Natural Language Processing PowerPoint Presentation, free download - ID:5753691

https://www.slideserve.com/bisa/natural-language-processing/

-----

// Japanese resources

// Japanese slides

Natural Language Processing (Almost) from Scratch(第 6 回 Deep Learning…

https://www.slideshare.net/alembert2000/deep-learning-6

-----

// Simplified Chinese resources

读论文《Natural Language Processing from Scratch》 - 知乎

https://zhuanlan.zhihu.com/p/59744800

Wednesday, July 15, 2020

All-Round AI Course (Highlights)

All-Round AI Course (Highlights)

2020/01/01

-----

Fig. Start(圖片來源:Pixabay)。

-----

Outline

一、LeNet

二、LeNet Python Lab

三、NIN

四、ResNet

五、FCN

六、YOLOv1

七、LSTM

八、Seq2seq

九、Attention

一0、ConvS2S

一一、Transformer

一二、BERT

一三、Weight Decay

一四、Dropout

一五、Batch Normalization

一六、Layer Nirmalization

一七、Adam

一八、Lookahead

-----

// Amazon.com:《Python Programming An Introduction to Computer Science》第三版。 (9781590282755) John Zelle Books

-----

// Amazon.com:Advanced Engineering Mathematics, 10Th Ed, Isv (9788126554232) Erwin Kreyszig Books

-----

// Amazon.com:Discrete - Time Signal Processing (9789332535039) Oppenheim Schafer Books

-----

// History of Deep Learning

-----

// History of Deep Learning

-----

// Deep Learning Paper

-----

// Deep Learning Paper

-----

// Deep Learning Paper

-----

// Recent Advances in CNN

-----

-----

-----

◎ LeNet

-----

-----

-----

-----

-----

-----

// 奇異值分解 (SVD) _ 線代啟示錄

-----

// Activation function 到底怎麼影響模型? - Dream Maker

-----

// Activation function 到底怎麼影響模型? - Dream Maker

-----

-----

-----

-----

-----

-----

◎ NIN

-----

-----

-----

◎ SENet

-----

# SENet

-----

◎ ResNet

-----

// DNN tip

-----

# ResNet v1

-----

-----

# ResNet-D

-----

# ResNet v2

-----

# ResNet-E

-----

# ResNet-V

-----

◎ FCN

-----

# FCN

-----

# FCN

-----

-----

◎ YOLOv1

-----

# YOLO v1

-----

# YOLO v1

-----

# YOLO v1

-----

◎ YOLOv3

-----

// Sensors _ Free Full-Text _ Improved UAV Opium Poppy Detection Using an Updated YOLOv3 Model _ HTML

-----

◎ LSTM

-----

-----

-----

◎ Seq2seq

-----

-----

◎ Attention

-----

-----

// Attention and Memory in Deep Learning and NLP – WildML

-----

◎ ConvS2S

-----

-----

-----

◎ Transformer

-----

// Attention Attention!

-----

-----

-----

-----

-----

-----

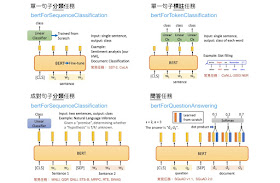

◎ BERT

-----

# BERT

-----

GPT-1

-----

// LeeMeng - 直觀理解 GPT-2 語言模型並生成金庸武俠小說

-----

# GPT-1

-----

ELMo

-----

// Learn how to build powerful contextual word embeddings with ELMo

-----

BERT

-----

// LeeMeng - 進擊的 BERT:NLP 界的巨人之力與遷移學習

-----

// LeeMeng - 進擊的 BERT:NLP 界的巨人之力與遷移學習

-----

// LeeMeng - 進擊的 BERT:NLP 界的巨人之力與遷移學習

-----

◎ Weight Decay

-----

// DNN tip

-----

-----

◎ Dropout

-----

// Dropout, DropConnect, and Maxout Mechanism Network. _ Download Scientific Diagram

-----

◎ Batch Normalization

-----

# PN

-----

// [ML筆記] Batch Normalization

-----

// An Intuitive Explanation of Why Batch Normalization Really Works (Normalization in Deep Learning Part 1) _ Machine Learning Explained

-----

# BN

-----

◎ Layer Normalization

-----

// 你是怎样看待刚刚出炉的 Layer Normalisation 的? - 知乎

-----

// Weight Normalization and Layer Normalization Explained (Normalization in Deep Learning Part 2) _ Machine Learning Explained

-----

◎ Adam

-----

// SGD算法比较 – Slinuxer

-----

◎ Lookahead

-----

// Lookahead Optimizer k steps forward, 1 step back - YouTube

-----

-----

-----

References

AI 三部曲(深度學習:從入門到精通)

https://hemingwang.blogspot.com/2019/05/trilogy.html

-----

Amazon.com:《Python Programming An Introduction to Computer Science》第三版。 (9781590282755) John Zelle Books

https://www.amazon.com/-/zh_TW/dp/1590282752/

Amazon.com:Advanced Engineering Mathematics, 10Th Ed, Isv (9788126554232) Erwin Kreyszig Books

https://www.amazon.com/-/zh_TW/dp/8126554231/

Amazon.com:Discrete - Time Signal Processing (9789332535039) Oppenheim Schafer Books

https://www.amazon.com/-/zh_TW/dp/9332535035/

-----

History of Deep Learning

Deep Learning Paper

Recent Advances in CNN

-----

奇異值分解 (SVD) _ 線代啟示錄

https://ccjou.wordpress.com/2009/09/01/%E5%A5%87%E7%95%B0%E5%80%BC%E5%88%86%E8%A7%A3-svd/

Activation function 到底怎麼影響模型? - Dream Maker

https://yuehhua.github.io/2018/07/27/activation-function/

DNN tip

http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2016/Lecture/DNN%20tip.pdf

Sensors _ Free Full-Text _ Improved UAV Opium Poppy Detection Using an Updated YOLOv3 Model _ HTML

https://www.mdpi.com/1424-8220/19/22/4851/htm

Attention and Memory in Deep Learning and NLP – WildML

http://www.wildml.com/2016/01/attention-and-memory-in-deep-learning-and-nlp/

Attention Attention!

https://lilianweng.github.io/lil-log/2018/06/24/attention-attention.html

[ML筆記] Batch Normalization

http://violin-tao.blogspot.com/2018/02/ml-batch-normalization.html

An Intuitive Explanation of Why Batch Normalization Really Works (Normalization in Deep Learning Part 1) _ Machine Learning Explained

https://mlexplained.com/2018/01/10/an-intuitive-explanation-of-why-batch-normalization-really-works-normalization-in-deep-learning-part-1/

你是怎样看待刚刚出炉的 Layer Normalisation 的? - 知乎

https://www.zhihu.com/question/48820040

Weight Normalization and Layer Normalization Explained (Normalization in Deep Learning Part 2) _ Machine Learning Explained

https://mlexplained.com/2018/01/13/weight-normalization-and-layer-normalization-explained-normalization-in-deep-learning-part-2/

-----

全方位 AI 課程(六十小時搞定深度學習)

http://hemingwang.blogspot.com/2020/01/all-round-ai-lectures.html

全方位 AI 課程報名處

https://www.facebook.com/permalink.php?story_fbid=113391586856343&id=104808127714689

2020/01/01

-----

Fig. Start (image source: Pixabay).

-----

Outline

1. LeNet

2. LeNet Python Lab

3. NIN

4. ResNet

5. FCN

6. YOLOv1

7. LSTM

8. Seq2seq

9. Attention

10. ConvS2S

11. Transformer

12. BERT

13. Weight Decay

14. Dropout

15. Batch Normalization

16. Layer Normalization

17. Adam

18. Lookahead

-----

// Amazon.com:《Python Programming An Introduction to Computer Science》第三版。 (9781590282755) John Zelle Books

-----

// Amazon.com:Advanced Engineering Mathematics, 10Th Ed, Isv (9788126554232) Erwin Kreyszig Books

-----

// Amazon.com:Discrete - Time Signal Processing (9789332535039) Oppenheim Schafer Books

-----

// History of Deep Learning

-----

// History of Deep Learning

-----

// Deep Learning Paper

-----

// Deep Learning Paper

-----

// Deep Learning Paper

-----

// Recent Advances in CNN

-----

◎ LeNet

-----

// 奇異值分解 (SVD) _ 線代啟示錄

-----

// Activation function 到底怎麼影響模型? - Dream Maker

-----

// Activation function 到底怎麼影響模型? - Dream Maker

-----

◎ NIN

-----

◎ SENet

-----

# SENet

-----

◎ ResNet

-----

// DNN tip

-----

# ResNet v1

-----

# ResNet-D

-----

# ResNet v2

-----

# ResNet-E

-----

# ResNet-V

-----

◎ FCN

-----

# FCN

-----

# FCN

-----

◎ YOLOv1

-----

# YOLO v1

-----

# YOLO v1

-----

# YOLO v1

-----

◎ YOLOv3

-----

// Sensors _ Free Full-Text _ Improved UAV Opium Poppy Detection Using an Updated YOLOv3 Model _ HTML

-----

◎ LSTM

-----

◎ Seq2seq

-----

◎ Attention

-----

// Attention and Memory in Deep Learning and NLP – WildML

-----

◎ ConvS2S

-----

◎ Transformer

-----

// Attention Attention!

-----

◎ BERT

-----

# BERT

-----

GPT-1

-----

// LeeMeng - 直觀理解 GPT-2 語言模型並生成金庸武俠小說

-----

# GPT-1

-----

ELMo

-----

// Learn how to build powerful contextual word embeddings with ELMo

-----

BERT

-----

// LeeMeng - 進擊的 BERT:NLP 界的巨人之力與遷移學習

-----

// LeeMeng - 進擊的 BERT:NLP 界的巨人之力與遷移學習

-----

// LeeMeng - 進擊的 BERT:NLP 界的巨人之力與遷移學習

-----

◎ Weight Decay

-----

// DNN tip

-----

# AdamW

-----

◎ Dropout

-----

// Dropout, DropConnect, and Maxout Mechanism Network. _ Download Scientific Diagram

-----

◎ Batch Normalization

-----

# PN

-----

// [ML筆記] Batch Normalization

-----

// An Intuitive Explanation of Why Batch Normalization Really Works (Normalization in Deep Learning Part 1) _ Machine Learning Explained

-----

# BN

-----

◎ Layer Normalization

-----

// 你是怎样看待刚刚出炉的 Layer Normalisation 的? - 知乎

-----

// Weight Normalization and Layer Normalization Explained (Normalization in Deep Learning Part 2) _ Machine Learning Explained

-----

◎ Adam

-----

// SGD算法比较 – Slinuxer

-----

◎ Lookahead

-----

// Lookahead Optimizer k steps forward, 1 step back - YouTube

-----

References

AI 三部曲(深度學習:從入門到精通)

https://hemingwang.blogspot.com/2019/05/trilogy.html

-----

Amazon.com:《Python Programming An Introduction to Computer Science》第三版。 (9781590282755) John Zelle Books

https://www.amazon.com/-/zh_TW/dp/1590282752/

Amazon.com:Advanced Engineering Mathematics, 10Th Ed, Isv (9788126554232) Erwin Kreyszig Books

https://www.amazon.com/-/zh_TW/dp/8126554231/

Amazon.com:Discrete - Time Signal Processing (9789332535039) Oppenheim Schafer Books

https://www.amazon.com/-/zh_TW/dp/9332535035/

-----

History of Deep Learning

Alom, Md Zahangir, et al. "The history began from AlexNet: A comprehensive survey on deep learning approaches." arXiv preprint arXiv:1803.01164 (2018).

https://arxiv.org/ftp/arxiv/papers/1803/1803.01164.pdf

Deep Learning Paper

LeCun, Yann, Yoshua Bengio, and Geoffrey Hinton. "Deep learning." Nature 521.7553 (2015): 436-444.

https://creativecoding.soe.ucsc.edu/courses/cs523/slides/week3/DeepLearning_LeCun.pdf

Recent Advances in CNN

Gu, Jiuxiang, et al. "Recent advances in convolutional neural networks." Pattern Recognition 77 (2018): 354-377.

https://arxiv.org/pdf/1512.07108.pdf

-----

奇異值分解 (SVD) _ 線代啟示錄

https://ccjou.wordpress.com/2009/09/01/%E5%A5%87%E7%95%B0%E5%80%BC%E5%88%86%E8%A7%A3-svd/

Activation function 到底怎麼影響模型? - Dream Maker

https://yuehhua.github.io/2018/07/27/activation-function/

DNN tip

http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2016/Lecture/DNN%20tip.pdf

Sensors _ Free Full-Text _ Improved UAV Opium Poppy Detection Using an Updated YOLOv3 Model _ HTML

https://www.mdpi.com/1424-8220/19/22/4851/htm

Attention and Memory in Deep Learning and NLP – WildML

http://www.wildml.com/2016/01/attention-and-memory-in-deep-learning-and-nlp/

Attention Attention!

https://lilianweng.github.io/lil-log/2018/06/24/attention-attention.html

[ML筆記] Batch Normalization

http://violin-tao.blogspot.com/2018/02/ml-batch-normalization.html

An Intuitive Explanation of Why Batch Normalization Really Works (Normalization in Deep Learning Part 1) _ Machine Learning Explained

https://mlexplained.com/2018/01/10/an-intuitive-explanation-of-why-batch-normalization-really-works-normalization-in-deep-learning-part-1/

你是怎样看待刚刚出炉的 Layer Normalisation 的? - 知乎

https://www.zhihu.com/question/48820040

Weight Normalization and Layer Normalization Explained (Normalization in Deep Learning Part 2) _ Machine Learning Explained

https://mlexplained.com/2018/01/13/weight-normalization-and-layer-normalization-explained-normalization-in-deep-learning-part-2/

-----

全方位 AI 課程(六十小時搞定深度學習)

http://hemingwang.blogspot.com/2020/01/all-round-ai-lectures.html

全方位 AI 課程報名處

https://www.facebook.com/permalink.php?story_fbid=113391586856343&id=104808127714689