2020/01/01

-----

Fig. Start (image source: Pixabay).

-----

Outline

1. LeNet

2. LeNet Python Lab

3. NIN

4. ResNet

5. FCN

6. YOLOv1

7. LSTM

8. Seq2seq

9. Attention

10. ConvS2S

11. Transformer

12. BERT

13. Weight Decay

14. Dropout

15. Batch Normalization

16. Layer Normalization

17. Adam

18. Lookahead

-----

// Amazon.com: Python Programming: An Introduction to Computer Science, 3rd Edition (9781590282755) John Zelle Books

-----

// Amazon.com: Advanced Engineering Mathematics, 10Th Ed, Isv (9788126554232) Erwin Kreyszig Books

-----

// Amazon.com: Discrete - Time Signal Processing (9789332535039) Oppenheim Schafer Books

-----

// History of Deep Learning

-----

// Deep Learning Paper

-----

// Recent Advances in CNN

-----

◎ LeNet

-----

// Singular Value Decomposition (SVD) _ 線代啟示錄

-----

// How do activation functions actually affect a model? - Dream Maker

-----

◎ NIN

-----

◎ SENet

-----

# SENet

-----

◎ ResNet

-----

// DNN tip

-----

# ResNet v1

-----

# ResNet-D

-----

# ResNet v2

-----

# ResNet-E

-----

# ResNet-V

-----

◎ FCN

-----

# FCN

-----

◎ YOLOv1

-----

# YOLO v1

-----

◎ YOLOv3

-----

// Sensors _ Free Full-Text _ Improved UAV Opium Poppy Detection Using an Updated YOLOv3 Model _ HTML

-----

◎ LSTM

-----

◎ Seq2seq

-----

◎ Attention

-----

// Attention and Memory in Deep Learning and NLP – WildML

-----

◎ ConvS2S

-----

◎ Transformer

-----

// Attention Attention!

-----

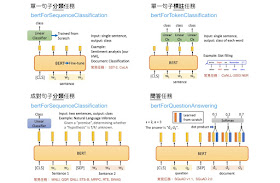

◎ BERT

-----

# BERT

-----

GPT-1

-----

// LeeMeng - An Intuitive Understanding of the GPT-2 Language Model, and Generating Jin Yong Wuxia Novels

-----

# GPT-1

-----

ELMo

-----

// Learn how to build powerful contextual word embeddings with ELMo

-----

BERT

-----

// LeeMeng - Attack on BERT: The Power of a Giant in NLP and Transfer Learning

-----

◎ Weight Decay

-----

// DNN tip

-----

# AdamW

-----

◎ Dropout

-----

// Dropout, DropConnect, and Maxout Mechanism Network. _ Download Scientific Diagram

-----

◎ Batch Normalization

-----

# PN

-----

// [ML Notes] Batch Normalization

-----

// An Intuitive Explanation of Why Batch Normalization Really Works (Normalization in Deep Learning Part 1) _ Machine Learning Explained

-----

# BN

-----

◎ Layer Normalization

-----

// What do you think of the newly released Layer Normalisation? - Zhihu

-----

// Weight Normalization and Layer Normalization Explained (Normalization in Deep Learning Part 2) _ Machine Learning Explained

-----

◎ Adam

-----

// Comparison of SGD Algorithms – Slinuxer

-----

◎ Lookahead

-----

// Lookahead Optimizer k steps forward, 1 step back - YouTube

-----

References

AI Trilogy (Deep Learning: From Beginner to Mastery)

https://hemingwang.blogspot.com/2019/05/trilogy.html

-----

Amazon.com: Python Programming: An Introduction to Computer Science, 3rd Edition (9781590282755) John Zelle Books

https://www.amazon.com/-/zh_TW/dp/1590282752/

Amazon.com: Advanced Engineering Mathematics, 10Th Ed, Isv (9788126554232) Erwin Kreyszig Books

https://www.amazon.com/-/zh_TW/dp/8126554231/

Amazon.com: Discrete - Time Signal Processing (9789332535039) Oppenheim Schafer Books

https://www.amazon.com/-/zh_TW/dp/9332535035/

-----

History of Deep Learning

Alom, Md Zahangir, et al. "The history began from alexnet: A comprehensive survey on deep learning approaches." arXiv preprint arXiv:1803.01164 (2018).

https://arxiv.org/ftp/arxiv/papers/1803/1803.01164.pdf

Deep Learning Paper

LeCun, Yann, Yoshua Bengio, and Geoffrey Hinton. "Deep learning." Nature 521.7553 (2015): 436.

https://creativecoding.soe.ucsc.edu/courses/cs523/slides/week3/DeepLearning_LeCun.pdf

Recent Advances in CNN

Gu, Jiuxiang, et al. "Recent advances in convolutional neural networks." Pattern Recognition 77 (2018): 354-377.

https://arxiv.org/pdf/1512.07108.pdf

-----

Singular Value Decomposition (SVD) _ 線代啟示錄

https://ccjou.wordpress.com/2009/09/01/%E5%A5%87%E7%95%B0%E5%80%BC%E5%88%86%E8%A7%A3-svd/

How do activation functions actually affect a model? - Dream Maker

https://yuehhua.github.io/2018/07/27/activation-function/

DNN tip

http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2016/Lecture/DNN%20tip.pdf

Sensors _ Free Full-Text _ Improved UAV Opium Poppy Detection Using an Updated YOLOv3 Model _ HTML

https://www.mdpi.com/1424-8220/19/22/4851/htm

Attention and Memory in Deep Learning and NLP – WildML

http://www.wildml.com/2016/01/attention-and-memory-in-deep-learning-and-nlp/

Attention Attention!

https://lilianweng.github.io/lil-log/2018/06/24/attention-attention.html

[ML Notes] Batch Normalization

http://violin-tao.blogspot.com/2018/02/ml-batch-normalization.html

An Intuitive Explanation of Why Batch Normalization Really Works (Normalization in Deep Learning Part 1) _ Machine Learning Explained

https://mlexplained.com/2018/01/10/an-intuitive-explanation-of-why-batch-normalization-really-works-normalization-in-deep-learning-part-1/

What do you think of the newly released Layer Normalisation? - Zhihu

https://www.zhihu.com/question/48820040

Weight Normalization and Layer Normalization Explained (Normalization in Deep Learning Part 2) _ Machine Learning Explained

https://mlexplained.com/2018/01/13/weight-normalization-and-layer-normalization-explained-normalization-in-deep-learning-part-2/

-----

All-Round AI Course (Deep Learning in Sixty Hours)

http://hemingwang.blogspot.com/2020/01/all-round-ai-lectures.html

All-Round AI Course Registration

https://www.facebook.com/permalink.php?story_fbid=113391586856343&id=104808127714689
