2019/12/02

-----

// Overview of different Optimizers for neural networks

-----

// An Overview on Optimization Algorithms in Deep Learning 1 - Taihong Xiao

-----

# Nadam

-----

// Stochastic Gradient Descent - Deep Learning#g - Medium

-----

References

# SGD

Bottou, Léon. "Stochastic gradient descent tricks." Neural networks: Tricks of the trade. Springer, Berlin, Heidelberg, 2012. 421-436.

https://www.microsoft.com/en-us/research/wp-content/uploads/2012/01/tricks-2012.pdf

# Nadam

Dozat, Timothy. "Incorporating Nesterov momentum into Adam." (2016).

https://openreview.net/pdf?id=OM0jvwB8jIp57ZJjtNEZ

-----

Overview of different Optimizers for neural networks

https://medium.com/datadriveninvestor/overview-of-different-optimizers-for-neural-networks-e0ed119440c3

An Overview on Optimization Algorithms in Deep Learning 1 - Taihong Xiao

https://prinsphield.github.io/posts/2016/02/overview_opt_alg_deep_learning1/

Stochastic Gradient Descent - Deep Learning#g - Medium

https://medium.com/deep-learning-g/stochastic-gradient-descent-63a155ba3975

-----

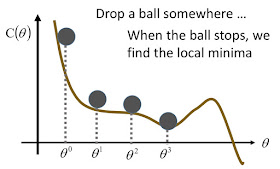

SGD(1) — for non-convex functions – Ang's learning notes

https://angnotes.wordpress.com/2018/08/19/sgd1-for-non-convex-functions/
