2019/12/02

-----

// Overview of different Optimizers for neural networks

-----

// An Overview on Optimization Algorithms in Deep Learning 2 - Taihong Xiao

-----

// Deep Learning Optimization Methods: AdaGrad - Zhihu

-----

References

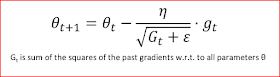

# AdaGrad

Duchi, John, Elad Hazan, and Yoram Singer. "Adaptive subgradient methods for online learning and stochastic optimization." Journal of Machine Learning Research 12.Jul (2011): 2121-2159.

http://www.jmlr.org/papers/volume12/duchi11a/duchi11a.pdf

-----

Overview of different Optimizers for neural networks

https://medium.com/datadriveninvestor/overview-of-different-optimizers-for-neural-networks-e0ed119440c3

An Overview on Optimization Algorithms in Deep Learning 2 - Taihong Xiao

https://prinsphield.github.io/posts/2016/02/overview_opt_alg_deep_learning2/

-----

Deep Learning Optimization Methods: AdaGrad - Zhihu

https://zhuanlan.zhihu.com/p/29920135
