2019/01/17

-----

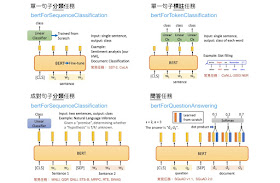

Fig. BERT (image source: Pixabay).

-----

# BERT

-----

// LeeMeng - Attack on BERT: The Power of Giants in NLP and Transfer Learning

-----

// Add Shine to your Data Science Resume with these 8 Ambitious Projects on GitHub

-----

References

[1] BERT

Devlin, Jacob, et al. "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding." arXiv preprint arXiv:1810.04805 (2018).

https://arxiv.org/pdf/1810.04805.pdf

-----

// English

# Illustrated

The Illustrated BERT, ELMo, and co. (How NLP Cracked Transfer Learning) – Jay Alammar – Visualizing machine learning one concept at a time

http://jalammar.github.io/illustrated-bert/

Dissecting BERT Part 1: The Encoder – Dissecting BERT – Medium

https://medium.com/dissecting-bert/dissecting-bert-part-1-d3c3d495cdb3

Dissecting BERT Part 2: BERT Specifics – Dissecting BERT – Medium

https://medium.com/dissecting-bert/dissecting-bert-part2-335ff2ed9c73

Dissecting BERT Appendix: The Decoder – Dissecting BERT – Medium

https://medium.com/dissecting-bert/dissecting-bert-appendix-the-decoder-3b86f66b0e5f

BERT Explained: State of the art language model for NLP

https://towardsdatascience.com/bert-explained-state-of-the-art-language-model-for-nlp-f8b21a9b6270

How BERT leverage attention mechanism and transformer to learn word contextual relations

https://towardsdatascience.com/how-bert-leverage-attention-mechanism-and-transformer-to-learn-word-contextual-relations-5bbee1b6dbdb

Deconstructing BERT: Distilling 6 Patterns from 100 Million Parameters

https://www.kdnuggets.com/2019/02/deconstructing-bert-distilling-patterns-100-million-parameters.html

Are BERT Features InterBERTible?

https://www.kdnuggets.com/2019/02/bert-features-interbertible.html

Add Shine to your Data Science Resume with these 8 Ambitious Projects on GitHub

https://www.nekxmusic.com/add-shine-to-your-data-science-resume-with-these-8-ambitious-projects-on-github/

-----

// Simplified Chinese

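NLP (Natural Language Processing): A Summary of Text Representations, Part 2 (ELMo, Transformer, GPT, BERT) - 陈宸's blog - CSDN Blog (original title: NLP自然语言处理:文本表示总结 - 下篇)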

https://blog.csdn.net/qq_35883464/article/details/100173045

Attention isn't all you need! The Source of BERT's Power Goes Far Beyond Attention - Zhihu (original title: BERT的力量之源远不止注意力)

https://zhuanlan.zhihu.com/p/58430637

-----

LeeMeng - Attack on BERT: The Power of Giants in NLP and Transfer Learning (original title: 進擊的 BERT:NLP 界的巨人之力與遷移學習)

https://leemeng.tw/attack_on_bert_transfer_learning_in_nlp.html

-----

// Code

# PyTorch

GitHub - huggingface/pytorch-pretrained-BERT: 📖 The Big-&-Extending-Repository-of-Transformers: Pretrained PyTorch models for Google's BERT, OpenAI GPT & GPT-2, Google/CMU Transformer-XL

https://github.com/huggingface/pytorch-pretrained-BERT

GitHub - codertimo/BERT-pytorch: Google AI 2018 BERT pytorch implementation

https://github.com/codertimo/BERT-pytorch
